📋 Project Overview

Full-stack Telegram bot that sells AI image generation as a paid service. Built on SDXL Turbo running on an NVIDIA L40 GPU, with credit-based pricing, payment integration, and multiple quality tiers.

🔗 View on GitHub

💰 Pricing Tiers

- Starter: $1.20 for 100 credits (~100 images)

- Pro: $4.80 for 500 credits (~500 images)

- Power: $8.40 for 1000 credits (~1000 images)

💳 Payment via Telegram Stars | Instant credit top-up | Referral bonuses available
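The larger packages lower the price per credit. Here is a minimal sketch of that arithmetic using the listed prices. `Package` and `PACKAGES` are illustrative names, not taken from the bot's code. `Decimal` keeps the currency math exact.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Package:
    name: str
    price_usd: Decimal
    credits: int

    @property
    def usd_per_credit(self) -> Decimal:
        # Exact decimal division; no floating-point rounding on money
        return self.price_usd / self.credits


# Prices and credit amounts from the pricing tiers; the structure itself is illustrative
PACKAGES = [
    Package("Starter", Decimal("1.20"), 100),
    Package("Pro", Decimal("4.80"), 500),
    Package("Power", Decimal("8.40"), 1000),
]

for p in PACKAGES:
    print(f"{p.name}: ${p.usd_per_credit} per credit")
```

Starter works out to $0.012 per credit and Power to $0.0084, so the largest package is 30% cheaper per credit.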

🛠️ Technology Stack

- Backend: Python, python-telegram-bot

- AI model: SDXL Turbo (Stable Diffusion XL)

- GPU: NVIDIA L40 (48 GB VRAM)

- Deployment: Railway (bot) + VPS (GPU inference)

- Networking: ngrok tunnel

- Payment: Telegram Stars API

- State management: in-memory cache (prototype), with PostgreSQL planned

⚡ Key Features

- ⚡ 4-second generation time (SDXL Turbo)

- 💎 Credit-based monetization ($0.01-0.12 per image)

- 📊 3 quality tiers: Standard (512x512), HD (1024x1024), 4K (2048x2048)

- 💰 Telegram Stars payment integration

- 🎁 Referral system (+20 credits per referral)

- 🎨 7 pre-built style presets (anime, cyberpunk, pixel, fantasy, etc.)

- 👤 Admin credit management system

- 🔒 Rate limiting per user + prompt validation

- 📈 Break-even: ~50 images/day covers GPU rental cost
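The page does not describe the project's actual rules for per-user rate limiting and prompt validation. This is a minimal sketch that assumes a sliding-window limit and a simple length and blocklist check. `RateLimiter`, `validate_prompt`, `MAX_PROMPT_CHARS`, and `BLOCKED_TERMS` are all hypothetical.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Optional

MAX_PROMPT_CHARS = 500  # hypothetical limit
BLOCKED_TERMS = {"blocked-term-example"}  # hypothetical placeholder blocklist


class RateLimiter:
    """Sliding window: at most max_requests per window_s seconds for each user."""

    def __init__(self, max_requests: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_requests = max_requests
        self.window_s = window_s
        self.clock = clock
        self._hits = defaultdict(deque)  # user_id -> timestamps of accepted requests

    def allow(self, user_id: int) -> bool:
        now = self.clock()
        hits = self._hits[user_id]
        # Forget requests that have slid out of the window
        while hits and now - hits[0] >= self.window_s:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


def validate_prompt(prompt: str) -> Optional[str]:
    """Return an error message for the user, or None if the prompt is acceptable."""
    text = prompt.strip()
    if not text:
        return "Prompt is empty."
    if len(text) > MAX_PROMPT_CHARS:
        return f"Prompt is longer than {MAX_PROMPT_CHARS} characters."
    lowered = text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return "Prompt contains a blocked term."
    return None
```

In a generation handler, both checks would run before the credit check, so rejected requests never reach the GPU.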

🎨 Bot Interface & Sample Outputs

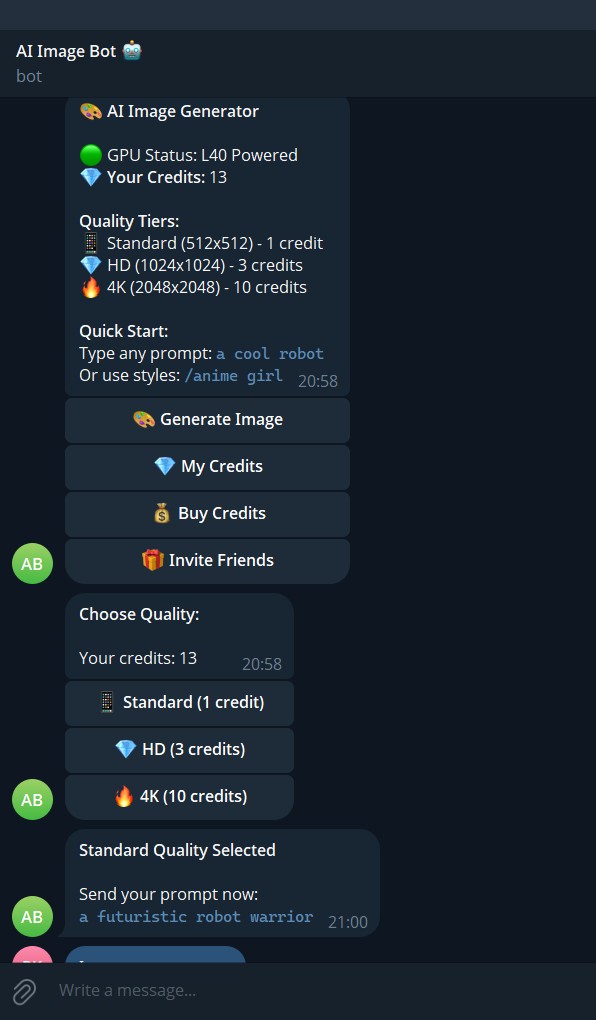

📱 User Interface

Main Menu

L40 GPU status indicator, credit balance display, quality tier selection (Standard/HD/4K)

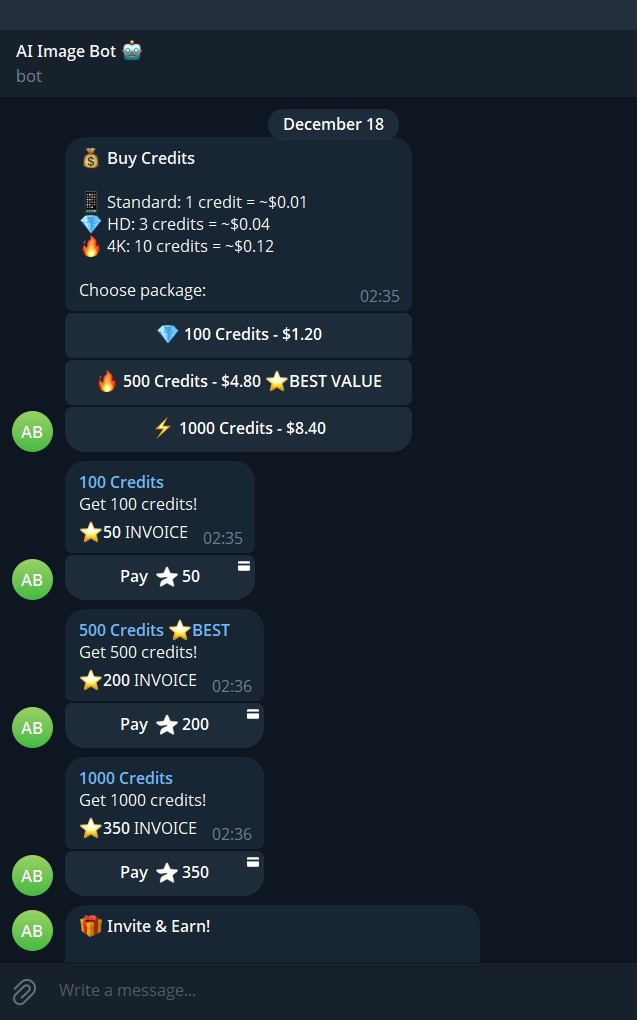

Payment Packages

Telegram Stars integration with instant credit delivery

🖼️ Generated Images

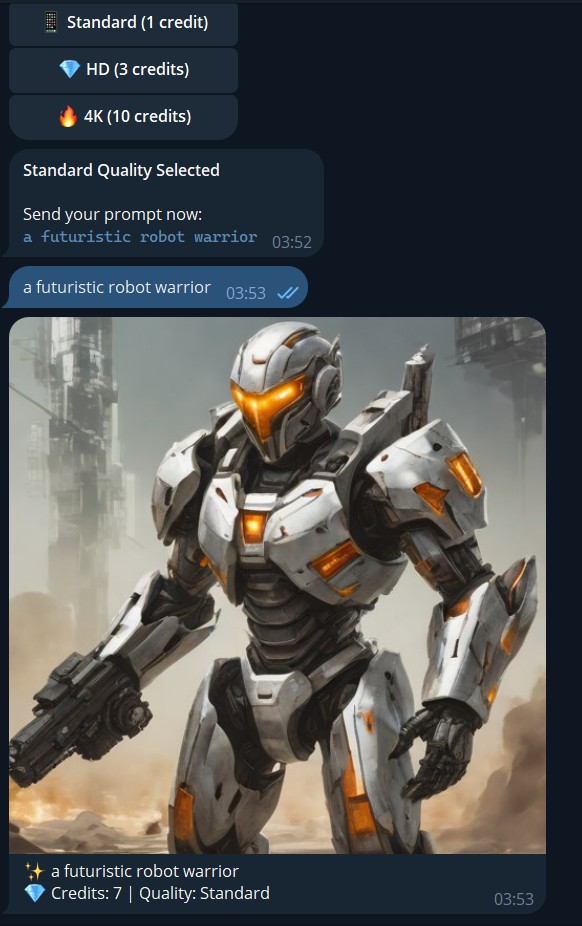

Sample Output

Prompt: "a futuristic robot warrior" | ⚡ 4 seconds | 📱 Standard Quality (512x512)

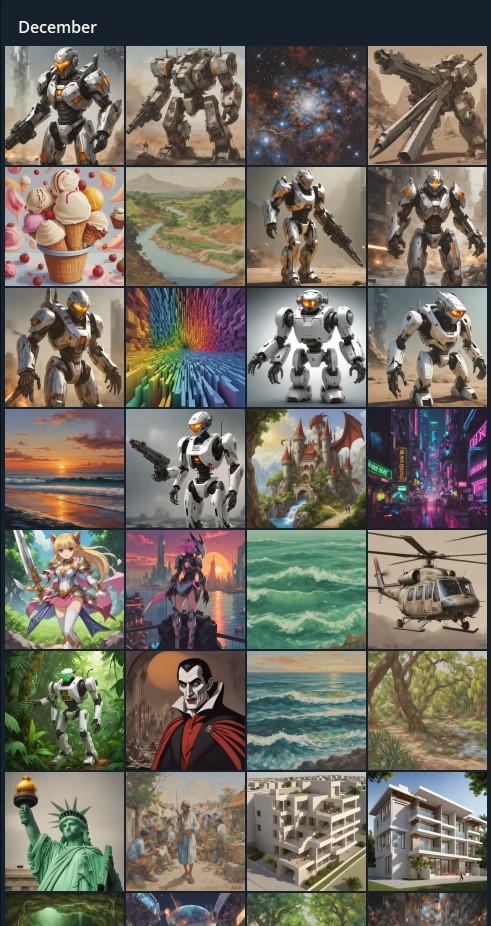

30+ Diverse Outputs

Robots, mechs, landscapes, anime characters, cyberpunk scenes, nature, architecture, abstract art

📸 Visual Proof: The screenshots show the production bot with its working payment system, credit management, and real-time L40 GPU status. Every image shown was generated in about 4 seconds with SDXL Turbo.

🚧 Challenges & Solutions

Challenge 1: Docker conflicts on VPS

✅ Solution: Ran the Python service directly on the VPS, without containerization

💡 Learning: Sometimes simple solutions work better than complex ones

Challenge 2: Secure API communication between Railway and VPS

✅ Solution: An ngrok tunnel exposes the VPS inference API, and the bot reads the tunnel URL from an environment variable

💡 Learning: Environment variables keep sensitive endpoint URLs out of the codebase
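Here is a sketch of loading the tunnel URL at startup. It assumes the variable is named `GPU_API`, matching the module-level name used in the Code Highlights excerpt, but the real variable name is not given. `load_gpu_api_url` is hypothetical. If the value is missing or malformed, the function fails immediately, which helps when the tunnel URL changes between deployments.

```python
import os
from collections.abc import Mapping
from urllib.parse import urlparse


def load_gpu_api_url(env: Mapping[str, str] = os.environ, var: str = "GPU_API") -> str:
    """Read the inference server's public tunnel URL from the environment."""
    raw = env.get(var, "").strip()
    if not raw:
        raise RuntimeError(f"{var} is not set; export the current tunnel URL first")
    parsed = urlparse(raw)
    # Require HTTPS so prompts and images do not cross the internet in cleartext
    if parsed.scheme != "https" or not parsed.netloc:
        raise RuntimeError(f"{var} must be an https:// URL, got {raw!r}")
    # Drop a trailing slash so f"{url}/generate" does not produce a double slash
    return raw.rstrip("/")
```

At startup the bot would call `GPU_API = load_gpu_api_url()` once, before registering handlers.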

Challenge 3: 35 deployment iterations to production

✅ Solution: Systematic debugging of timeouts, payload limits, connection drops

💡 Learning: Persistence and methodical error tracking lead to success
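Timeouts and dropped connections are often transient. One common mitigation is retrying with exponential backoff. This sketch shows that technique as an assumption; it is not the project's documented fix. `call_with_retries` is a hypothetical helper, and `sleep` is injectable so the delays can be checked without waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay_s: float = 1.0,
    retry_on: tuple = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying transient failures with exponential backoff (1 s, 2 s, 4 s, ...)."""
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay_s * 2 ** attempt)
    raise ValueError("attempts must be at least 1")
```

The exceptions in `requests` do not subclass the built-in `TimeoutError` or `ConnectionError`. With that library, pass `retry_on=(requests.exceptions.Timeout, requests.exceptions.ConnectionError)` instead.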

Challenge 4: Payment system integration

✅ Solution: Telegram Stars API with invoice generation and webhook handling

💡 Learning: Platform-native payment systems reduce friction
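The payment callback in the code excerpt reads the credit amount from the invoice payload with `payload.split('_')[1]`. This is a sketch of a matching payload builder and a stricter parser. It assumes a `credits_<amount>` format (the prefix is hypothetical) and the three package sizes from the pricing tiers. Rejecting unknown amounts catches mismatches between invoices and the package catalogue early. Telegram also requires the bot to answer the pre-checkout query before it sends `successful_payment`; that handler is not shown.

```python
VALID_CREDIT_PACKAGES = {100, 500, 1000}  # credit amounts from the pricing tiers
PAYLOAD_PREFIX = "credits"  # hypothetical prefix; only "<prefix>_<credits>" is implied


def build_invoice_payload(credits: int) -> str:
    """Encode a package into the invoice payload string."""
    if credits not in VALID_CREDIT_PACKAGES:
        raise ValueError(f"unknown package: {credits}")
    return f"{PAYLOAD_PREFIX}_{credits}"


def parse_invoice_payload(payload: str) -> int:
    """Decode the payload from successful_payment, rejecting anything unexpected."""
    prefix, _, amount = payload.partition("_")
    if prefix != PAYLOAD_PREFIX or not amount.isdigit():
        raise ValueError(f"malformed payload: {payload!r}")
    credits = int(amount)
    if credits not in VALID_CREDIT_PACKAGES:
        raise ValueError(f"unknown package in payload: {payload!r}")
    return credits
```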

📊 Results & Impact

- Deployment iterations: 35

- Generation time: 4 seconds

- Users tested: 10+ (family and friends)

- Images generated: 30+ diverse outputs

⚡ Scale Capability: Designed to scale to 100+ concurrent users with queueing, rate limits, and load balancing.
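One way to sketch the queueing is an `asyncio.Semaphore` that caps in-flight GPU requests while later callers wait their turn. `GpuGate` and the limit of 2 are hypothetical, and `asyncio.sleep` stands in for the real HTTP call to the inference server.

```python
import asyncio


class GpuGate:
    """Cap the number of in-flight GPU requests; extra callers wait for a free slot."""

    def __init__(self, max_concurrent: int):
        self._sem = asyncio.Semaphore(max_concurrent)
        self.in_flight = 0
        self.peak = 0  # highest concurrency observed, for monitoring

    async def run(self, job):
        async with self._sem:
            self.in_flight += 1
            self.peak = max(self.peak, self.in_flight)
            try:
                return await job()
            finally:
                self.in_flight -= 1


async def demo():
    gate = GpuGate(max_concurrent=2)

    async def fake_generation(i: int) -> int:
        await asyncio.sleep(0.01)  # stands in for the ~4 s GPU call
        return i

    results = await asyncio.gather(
        *(gate.run(lambda i=i: fake_generation(i)) for i in range(10))
    )
    return results, gate.peak
```

Running `asyncio.run(demo())` completes all ten jobs while never exceeding two concurrent GPU calls.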

💻 Code Highlights

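```python
# Key Functions from bot.py (~250 lines)
import asyncio

import requests
from telegram import Update
from telegram.ext import ContextTypes

# GPU_API, QUALITY_TIERS, user_credits and show_buy_options() are defined elsewhere in bot.py


async def generate_handler(update: Update, context: ContextTypes.DEFAULT_TYPE):
    """Main image generation with quality tiers"""
    user_id = update.effective_user.id
    prompt = update.message.text  # assumption: the prompt is the message text
    quality = context.user_data.get('quality', 'standard')
    required_credits = QUALITY_TIERS[quality]['credits']

    # Credit check
    if user_credits.get(user_id, 0) < required_credits:
        return await show_buy_options(update, context)

    # Generate with SDXL Turbo (~4 seconds). requests is blocking, so run it in
    # a worker thread to keep the bot's event loop responsive.
    response = await asyncio.to_thread(
        requests.post,
        f"{GPU_API}/generate",
        json={"prompt": prompt, "size": QUALITY_TIERS[quality]['size']},
        timeout=60,  # assumed limit; avoids waiting forever on a dropped tunnel
    )
    response.raise_for_status()

    # Deduct credits only after a successful generation, then send the image
    user_credits[user_id] -= required_credits
    await update.message.reply_photo(photo=response.content)


async def successful_payment_callback(update: Update, context: ContextTypes.DEFAULT_TYPE):
    """Handle Telegram Stars payments"""
    user_id = update.effective_user.id
    payload = update.message.successful_payment.invoice_payload
    credits = int(payload.split('_')[1])  # payload format: "<prefix>_<credits>"

    # .get() avoids a KeyError for users with no prior balance
    user_credits[user_id] = user_credits.get(user_id, 0) + credits

    await update.message.reply_text(
        f"✅ Payment Successful!\n💎 +{credits} credits added!"
    )
```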

🔑 Key Functions

- start() - User onboarding with referral tracking

- generate_handler() - Main image generation with quality selection

- successful_payment_callback() - Credit purchase processing

- button_handler() - Interactive menu system
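The excerpt does not show how `start()` tracks referrals. This sketch assumes Telegram deep links of the form `https://t.me/<bot_username>?start=ref_<user_id>`. Telegram delivers that parameter as `/start ref_<user_id>`, and python-telegram-bot exposes it in `context.args`. The `ref_` prefix, `apply_referral`, and both dictionaries are hypothetical; the +20 bonus comes from the feature list.

```python
from typing import Optional

REFERRAL_BONUS = 20  # "+20 credits per referral"

user_credits: dict[int, int] = {}  # user_id -> credit balance (in-memory, as in the prototype)
referred_by: dict[int, int] = {}  # new user_id -> referrer user_id


def apply_referral(new_user_id: int, start_args: list[str]) -> Optional[int]:
    """Credit the referrer once, based on a deep-link argument like 'ref_12345'."""
    if not start_args or not start_args[0].startswith("ref_"):
        return None
    referrer_text = start_args[0][len("ref_"):]
    if not referrer_text.isdigit():
        return None
    referrer_id = int(referrer_text)
    # Ignore self-referrals, repeat referrals, and users who already have a balance
    if referrer_id == new_user_id or new_user_id in referred_by or new_user_id in user_credits:
        return None
    referred_by[new_user_id] = referrer_id
    user_credits[referrer_id] = user_credits.get(referrer_id, 0) + REFERRAL_BONUS
    return referrer_id
```

Inside `start()`, this would be called as `apply_referral(update.effective_user.id, context.args)`.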

🚀 What I'm Building Next

Exploring GPU SaaS infrastructure, AI automation systems, and scalable inference platforms.

Open to collaborations, technical roles, and startup opportunities.